Wouldn’t it be fantastic to have a mentor or coach help you make decisions? Yes! An argument can be made that in a complex area like medicine, mentoring is not a nice-to-have, but is required to achieve meaningful behavior changes in clinical practice. Here, we will explore the value of corrective mentoring within medical education initiatives, show changes in competency within immersive programs using corrective mentoring, and highlight the underlying cognitive science of skill development that drives its effectiveness. Skill development serves as the bridge between “knowledge” and clinically treating patients.

Why Corrective Mentoring?

Most clinical medical education focuses on knowledge transfer with the assumption that the clinician will apply it appropriately with their patients. This assumption is often not valid. When learners are confronted with a real situation involving a complex topic, with many variables, and a high-stakes decision, they are less likely to apply what they learned because they lack both competence and confidence. The result: no change in behavior.

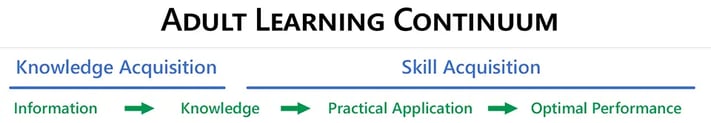

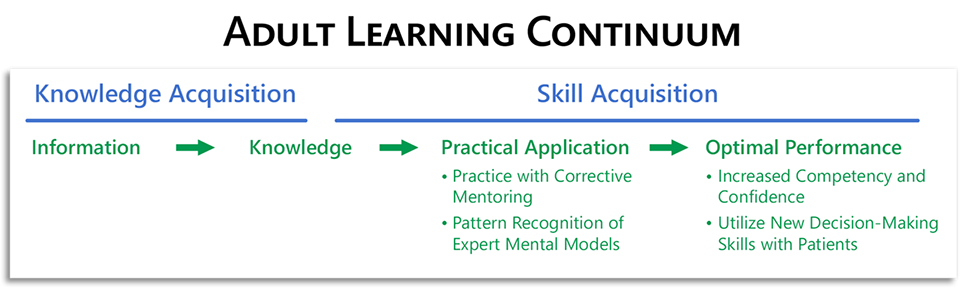

Practicing in realistic situations with expert corrective mentoring and coaching is a proven methodology to develop skills [1-7]. It’s been successfully used across disciplines from aviation to athletics, in the arts, technical trades, corporate business training, and of course, medicine. It’s part of the learning continuum.

Continuing Medical Education Example

Syandus collaborated with Horizon CME in developing an activity on the AliveSim™ platform to close practice gaps in atopic dermatitis. The educational design of AliveSim is to place learners in authentic situations built around known practice gaps, and allow them to make decisions and then receive expert mentoring feedback.

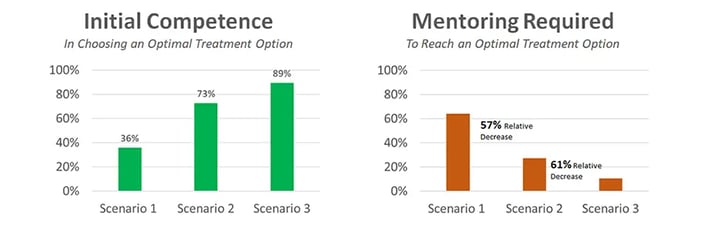

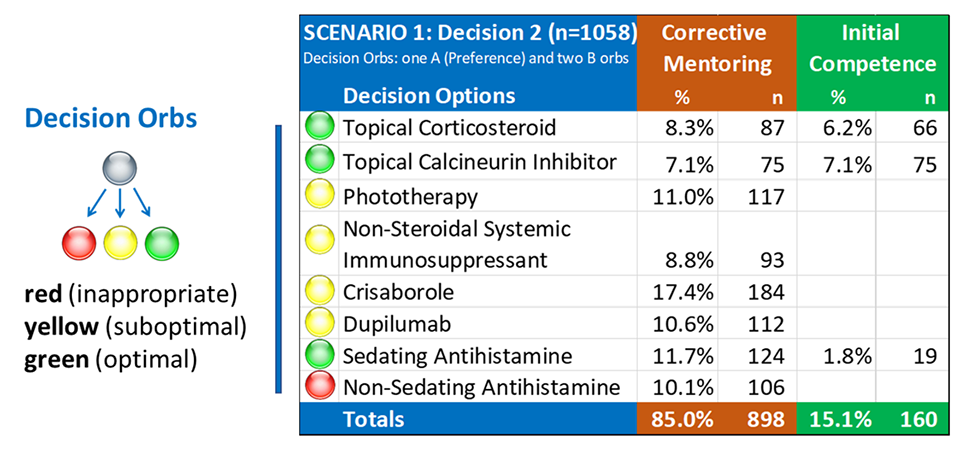

Learners were exposed to three different clinical situations in three separate scenarios, where they were to decide on a treatment strategy for severe atopic dermatitis. By design, there are two types of decision outcomes: learners can be initially competent, which means they selected an optimal therapeutic option on their first try, or they required corrective mentoring feedback before recognizing an optimal strategy.

In this example, 36% of learners’ decisions in the first clinical situation were initially competent, and 64% needed significant corrective mentoring before the learner recognized the optimal options. In the second situation, 73% were initially competent, so 57% less corrective mentoring was required. By the third, similar situation, the mentoring needed dropped by another 61% and initial competence rose to 89%. The relative gain in initial competence from the first situation to the third was 147%. In addition, 97% of learners reported being more confident developing strategies for treating severe atopic dermatitis, suggesting they were both more competent and more confident after the virtual practice with mentoring.
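The relative figures can be checked with a few lines of arithmetic. The sketch below uses only the rounded percentages quoted above and assumes that every decision that was not initially competent required corrective mentoring (the two outcome types described earlier); the variable and function names are illustrative. It reproduces the 147% relative gain. The step-to-step drops in mentoring come out at roughly 58% and 59%, close to but not identical to the reported 57% and 61%, presumably because the reported values were calculated from unrounded data.

```python
# Rounded percentages quoted in the text for the three clinical situations.
initial_competence = [36, 73, 89]  # % of decisions optimal on the first try

# Assumption: decisions that were not initially competent needed mentoring.
mentoring_needed = [100 - p for p in initial_competence]  # [64, 27, 11]


def relative_change(start, end):
    """Percent change from start to end, relative to start."""
    return (end - start) / start * 100


# Gain in initial competence from the first situation to the third.
relative_gain = relative_change(initial_competence[0], initial_competence[-1])

# Drop in mentoring needed between consecutive situations (positive = less).
mentoring_drops = [
    -relative_change(a, b)
    for a, b in zip(mentoring_needed, mentoring_needed[1:])
]

print(f"Relative gain in initial competence: {relative_gain:.0f}%")
print("Drop in mentoring needed:", [f"{d:.0f}%" for d in mentoring_drops])
```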

What Does Ideal Mentoring Look Like in Medical Education?

Ideally, one-on-one interaction with an expert mentor is what we all want! Unfortunately, personal feedback from experts is not an economically scalable model for medical education. Even medical schools are struggling to find enough clinical mentors for their students [4].

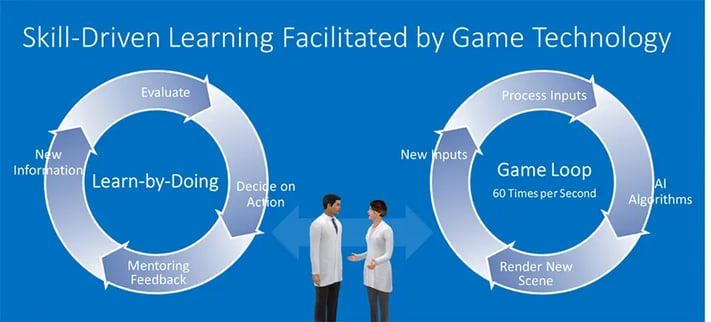

To circumvent this, AliveSim combined the cognitive science principles of skill development with a game engine loop to create an interactive experience with virtual patients, and most importantly, virtual peers, that respond with nuanced clinical conversation.

Three Criteria for Effective Corrective Mentoring

- Feedback must be authentic and conversational. Most medical decisions are not black and white. They are nuanced, with caveats, controversy, and points of view. The give and take of realistic conversation delivers this mentoring feedback just as an expert would. By contrast, static text responses read more like an assessment: “wrong,” followed by a rationale.

- Feedback should be fluid and in context, just like a real conversation. This means the response is not a generic entry pulled from a large database without regard to context. Instead, the feedback elucidates the nuanced rationale for the specific situation in which the learner chose one of the less optimal options.

- It’s a one-on-one, interactive, personal experience. This is critical to allow the learner to build on their own existing mental model to make more optimal decisions. A one-to-many webinar cannot provide the personalized practice and feedback needed for effective skill development.

Deeper Outcomes Reporting with Mentoring

Measuring performance is the holy grail in continuing medical education. Intent-to-change and follow-up surveys are not ideal measures. Since we cannot observe performance with patients, and even EHR data do not provide context, the best surrogate is to place target clinicians in virtual situations, where we can assess what they would do. Assessment alone provides data but it’s not helpful to the learner. For the learner to develop skill, we must add personalized corrective mentoring to each of their decisions. They then reflect and revise their decision based on the feedback. Fortunately, adding corrective mentoring provides a valuable new layer of data to analyze.

In a medical education activity, the result of all this decision-making and mentoring is a large dataset with thousands of decisions, all built from identified practice gaps used to construct the experience. These data not only provide insight into decision patterns, but also inform us on where additional educational interventions are needed.

As an example, in the decision data from the first decision in the AliveSim dataset above, we can see the decisions with initial competence (green decision orbs, first try) and decisions where corrective mentoring was needed for inappropriate or suboptimal options (red or yellow decision orbs, respectively). Values for green orbs in the Corrective Mentoring column represent users arriving at an optimal choice after receiving corrective mentoring.
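The split between first-try (green) decisions and decisions that needed mentoring (yellow or red) can be sketched as a simple tally. This is a minimal illustration only: the `Decision` record, its field names, and the sample rows are hypothetical and do not represent the AliveSim data model or the dataset described above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    """One learner decision (hypothetical record layout)."""
    learner_id: str
    situation: int     # which clinical situation, e.g. 1, 2, or 3
    first_choice: str  # "green" optimal, "yellow" suboptimal, "red" inappropriate


def summarize(decisions):
    """Return the share of decisions that were optimal on the first try
    and the share that needed corrective mentoring."""
    counts = Counter(d.first_choice for d in decisions)
    total = sum(counts.values())
    if total == 0:
        return {"initial_competence_pct": 0.0, "corrective_mentoring_pct": 0.0}
    competent = counts["green"]
    return {
        "initial_competence_pct": 100 * competent / total,
        "corrective_mentoring_pct": 100 * (total - competent) / total,
    }


# Made-up sample rows for a single situation.
sample = [
    Decision("a", 1, "green"),
    Decision("b", 1, "yellow"),
    Decision("c", 1, "red"),
    Decision("d", 1, "green"),
    Decision("e", 1, "yellow"),
]

summary = summarize(sample)
print(summary)
```

Running the same tally separately for each situation would give the kind of trend in initial competence reported earlier.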

What Can We Learn from This Data?

- Initial Competence. Measure how many learner decisions were initially competent in specific clinical situations and whether decision making for a practice gap improved over time.

- Corrective Mentoring. By capturing the specific context where mentoring was needed, we can understand the clinical areas where clinicians struggled before recognizing an optimal path.

- Analyze Cohorts. Learner decisions can be further analyzed in cohorts based on professional designation, specialty, or experience level.

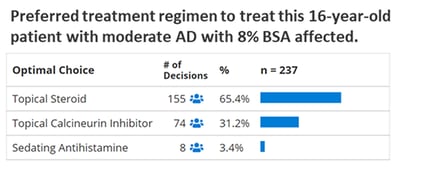

- Clinician Preferences. Often there is more than one optimal choice in a clinical situation. While we may want learners to identify all the optimal options – including new drugs, new indications, new tests, etc. that they may not have thought about before – we can also capture their preferred option in specific situations.

Here are the clinician preferences (their first optimal choice) from the dataset above:

Utilizing Expert Mental Models in Corrective Mentoring

In complex decision-making tasks where there are many variables (e.g., patient presentation, treatment history, comorbidities, lab and imaging results), experts have developed cognitive maps (mental models) that guide their decision making. The challenge for us as educators is that this cognitive process, and the underlying mental model employed by these experts, is invisible to us [3]. Because of the topic complexity, we must first identify practice gaps, and then extract the mental models that expert faculty use to close these gaps for themselves. In practice, this means asking which decision options the experts consider, and which of those options are optimal in one situation versus another. Their feedback, transformed into a conversation, becomes the nuanced expert mentoring dialogue that is used within AliveSim to emulate a mentor virtually.

Additional Medical Education Examples

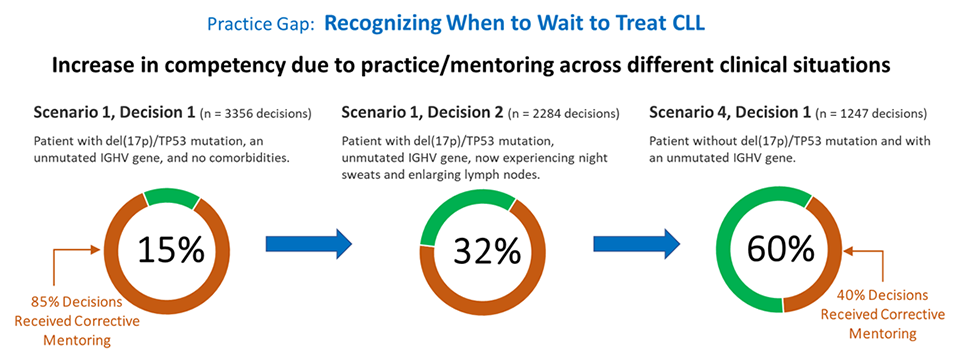

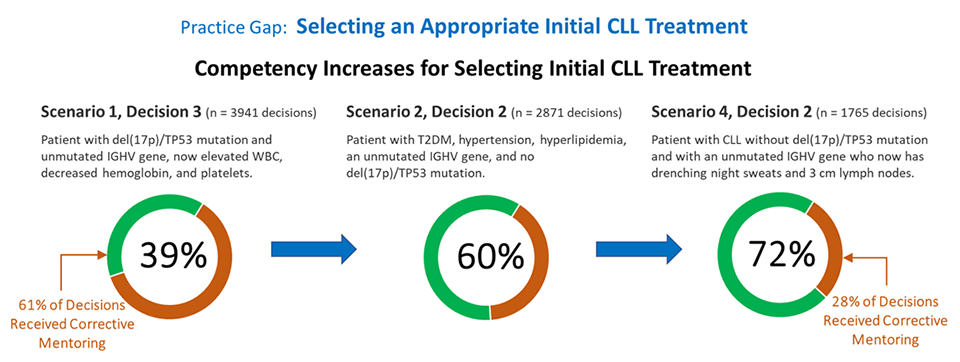

As another example, Syandus collaborated with NCCN to develop an AliveSim program in chronic lymphocytic leukemia (CLL). In the first example from this program, the practice gap was recognizing when to treat CLL, so as to avoid treating too early. As shown below, over the course of three separate situations, initial competency increased fourfold. In addition, 95% of the learners reported being more confident in recognizing when to treat CLL.

In another example, learners practiced appropriate initial CLL treatment for patients with varied comorbidities, symptoms, and cytogenetics. Here we see a similar trend, with an 85% relative gain in competency in recognizing the optimal options after working through three clinical situations.

In skill development terms, the learners became more competent in recognizing the decision patterns that experts use, through practice with mentoring in three different situations. During design, we are often asked: “Why do another scenario? We already covered that.” The answer is that the key to effective skill development is to practice in multiple situations! This is a significant paradigm shift from knowledge-focused medical education, and an important one. This practice builds the competence and confidence for physicians to incorporate complex new strategies into their practice.

Final Thoughts

Skill development is the bridge between “knowledge” and clinically treating patients. It’s the key to meaningful behavior change in complex domains like medicine. Proven skill development methodology involves identifying practice gaps and having experts set up situations and personally mentor learners through their decisions. While this is expensive and difficult to scale in practice, software engineering solutions like AliveSim can provide a surrogate environment to implement skill-driven learning at scale in medical education initiatives, and capture learner decision data for insightful outcomes reporting.

References

1. Jonassen D, Land S, editors. Theoretical Foundations of Learning Environments. Routledge; 2012.

2. Ericsson KA. Deliberate practice and acquisition of expert performance: a general overview. Acad Emerg Med. 2008;15:988-994. https://doi.org/10.1111/j.1553-2712.2008.00227.x

3. Collins A, Brown JS, Holum A. Cognitive apprenticeship: making thinking visible. American Educator. 1991;15(3):6-11.

4. Stalmeijer RE, Dolmans DHJM, Wolfhagen IHAP, et al. Cognitive apprenticeship in clinical practice: can it stimulate learning in the opinion of students? Adv Health Sci Educ. 2009;14:535-546. https://doi.org/10.1007/s10459-008-9136-0

5. Kolb D. Experiential Learning: Experience as the Source of Learning and Development. Upper Saddle River, NJ: Prentice Hall; 1984.

6. McGaghie WC, Draycott TJ, Dunn WF, Lopez CM, Stefanidis D. Evaluating the impact of simulation on translational patient outcomes. Simul Healthc. 2011;6 Suppl:S42-7.

7. Motola I, Devine LA, Chung HS, Sullivan JE, Issenberg SB. Simulation in healthcare education: a best evidence practical guide. AMEE Guide No. 82. Med Teach. 2013;35(10):e1511-30.

About Syandus: Virtual immersive learning technology that transforms knowledge into real-world performance. We immerse participants in realistic virtual situations with one-on-one expert coaching that gives them experience making optimal decisions. Syandus Learning Modules combine cognitive science principles, the realism of game technology, and our customers’ proprietary content to deliver rapid skill acquisition. Modules are cloud-based for easy deployment, fully trackable with embedded analytics, and can be used on any web-enabled device.